It’s important to promote good research in health care, particularly if it’s relevant to policy or patient care. It’s equally important to disclose limitations of that research, and, if you’re a journalist or act like one, the good folks at Health News Review will let you know when you’ve failed to do so.

A research letter in JAMA Internal Medicine by Michael Wang, Mark Bolland, and Andrew Grey illustrated just how frequently papers, press releases, and news stories mention limitations of observational studies. Spoiler: It’s relatively uncommon that they do so. No wonder the Health News Review crew is so busy!

Wang et al.

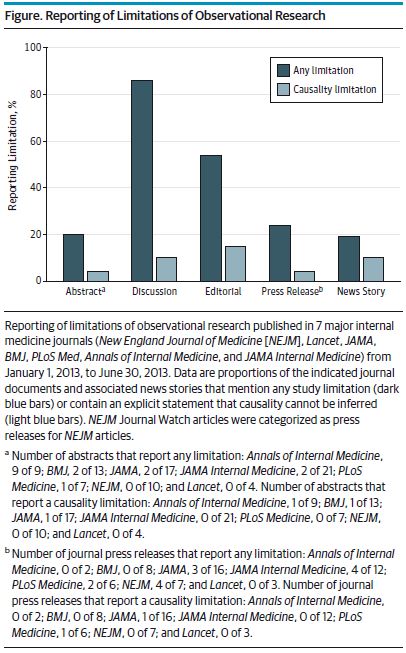

collated 81 prospective cohort and case-control studies with clinical outcomes published between January 1, 2013, and June 30, 2013, in the Annals of Internal Medicine, BMJ, JAMA, JAMA Internal Medicine, Lancet, New England Journal of Medicine, and PLoS Medicine; 48 accompanying editorials; 54 journal press releases; and 319 news stories generated within 2 months of publication. […] For each of the resulting 583 documents, [they] determined whether any study limitation was reported and whether there was an explicit statement that causality could not be inferred.

Their figure below illustrates the proportion of the various means of reporting results that discuss limitations. Nowhere does mention of limitations of causality rise above about 17%. Is that a problem?

Maybe not, because Wang et al. used a very strict definition of “limitation.” In their view, observational studies have a fundamental “inability to attribute causation,” so they looked for statements that “causality cannot be inferred.” That’s too strong.

All causal inferences rely on assumptions. The assumptions observational studies require are, in some respects (depending on the application), stronger than those required of randomized trials, but a causal inference is frequently reasonable, especially when those assumptions have been probed enough. What I’d look for in a discussion of limitations is not a statement that causality cannot be inferred, but a statement of the assumptions under which it can be, along with disclosure of threats to those assumptions. (More about this here and here, for instance.)

Nevertheless, limitations should be acknowledged, and sometimes they really are too severe to support a causal interpretation. On that point, Stephen Soumerai, Douglas Starr, and Sumit Majumdar have published a worthwhile primer on how to spot inappropriate causal inferences, with examples drawn from past studies and the news coverage of them.

They draw on the stories of postmenopausal hormone replacement therapy and of benzodiazepine use and hip fractures in the elderly, two examples in which early (and biased) observational work drove practice in the wrong direction. They also discuss similar episodes involving the effect of flu vaccination on mortality and the impact of electronic health records. In these cases, they show that some observational findings are implausible by using what amount to falsification tests (though they don’t use that term).
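The logic of a falsification test fits in a few lines of Python. The idea is to pick a period or outcome in which the exposure cannot plausibly have an effect. Flu vaccination cannot reduce deaths before influenza is circulating, so a large mortality gap between vaccinated and unvaccinated people in that window suggests confounding (healthier people being more likely to get vaccinated, for instance) rather than a vaccine effect. This is a minimal sketch under stated assumptions: the counts are invented for illustration and are not taken from the primer or any study, the function names are hypothetical, and the fixed tolerance stands in for the confidence interval a real analysis would use.

```python
def risk(deaths, n):
    """Crude risk: deaths divided by people at risk."""
    return deaths / n


def risk_ratio(deaths_vax, n_vax, deaths_unvax, n_unvax):
    """Risk among the vaccinated divided by risk among the unvaccinated."""
    return risk(deaths_vax, n_vax) / risk(deaths_unvax, n_unvax)


def passes_falsification(rr_null_period, tolerance=0.1):
    """In a period where the exposure cannot matter, the ratio should sit near 1.

    The fixed tolerance is a simplifying assumption; a real analysis would
    check whether a confidence interval for the ratio includes 1.
    """
    return abs(rr_null_period - 1.0) <= tolerance


# Hypothetical counts invented for illustration:
# (deaths_vax, n_vax, deaths_unvax, n_unvax)
cohort = {
    "before flu season": (60, 10_000, 100, 10_000),
    "during flu season": (70, 10_000, 120, 10_000),
}

rr = {period: risk_ratio(*counts) for period, counts in cohort.items()}
for period, value in rr.items():
    print(f"{period}: risk ratio {value:.2f}")

print("falsification test passed:", passes_falsification(rr["before flu season"]))  # False
```

In this made-up cohort the vaccinated look about as "protected" before flu season (ratio 0.60) as during it (0.58). That is the warning sign: an apparent effect where none is possible undermines the estimate where one is claimed.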

Ultimately, the authors recommend interrupted time series designs (of which difference-in-differences is one variant) as a route to more plausible causal inference with observational data. Other approaches they don’t discuss are also worthwhile, in my view, but require more training and care. I don’t fault the authors for leaving them out of what is intended to be a relatively easy read.
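To make the interrupted time series idea concrete, here is a minimal Python sketch of its simplest form, under loud assumptions: the monthly series is simulated, the intervention at month 12 is hypothetical, and `fit_line`, `naive_difference`, and `its_effect` are illustrative names, not functions from the primer or any library. Rather than full segmented regression (which estimates changes in level and slope jointly), it fits a linear trend to the pre-intervention months, projects that trend forward as the counterfactual, and averages the gap between observed and projected values.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x (one regressor)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def naive_difference(series, start):
    """Post-period mean minus pre-period mean, ignoring any underlying trend."""
    return mean(series[start:]) - mean(series[:start])


def its_effect(series, start):
    """Average gap between observed post-period values and the projected pre-period trend."""
    intercept, slope = fit_line(list(range(start)), series[:start])
    gaps = [series[t] - (intercept + slope * t) for t in range(start, len(series))]
    return mean(gaps)


# Simulated monthly outcome: rising by 0.5 per month, then a hypothetical
# intervention at month 12 lowers the level by 8.
INTERVENTION_MONTH = 12
series = [100 + 0.5 * t - (8 if t >= INTERVENTION_MONTH else 0) for t in range(24)]

print(f"naive before-after difference: {naive_difference(series, INTERVENTION_MONTH):.2f}")  # -2.00
print(f"interrupted time series estimate: {its_effect(series, INTERVENTION_MONTH):.2f}")  # -8.00
```

Because the simulated outcome was already trending upward, a naive before-after comparison understates the drop (-2 instead of -8). A real analysis would also need to handle autocorrelation, seasonality, and ideally a comparison series, which is where the difference-in-differences variant comes in.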

I recommend you take a look.