I’m writing this piece mostly to save myself time on Twitter and email. Every time I write about something like diet or nutrition, people start sending me messages on how I must have missed “this” or “that” study that proves me wrong.

The problem with relying only on epidemiologic data is that it’s often easy to find a study in the scientific literature that supports almost any belief. So if you want to be “proven” right, you can certainly cherry-pick to make your point.

There’s no better example of this than a classic 2012 systematic review (also recently highlighted at Vox) that was published in the American Journal of Clinical Nutrition. The authors selected 40-50 common ingredients and searched the literature for studies linking them to cancer risk. Of the 264 published studies, more than 70% found an association with a risk of cancer, some higher and some lower. When meta-analyses were published, though, the results (increased or decreased) were more conservative.

That’s because the outliers are more likely to get published. They’re also more likely to get media attention. But they’re usually that – outliers.
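To make the "outliers get published" point concrete, here's a rough simulation sketch. Everything in it is an assumption for illustration, not data from the review: the true relative risk is set to exactly 1 (no effect), the standard errors are made up, and "published" is crudely modeled as "statistically significant at roughly the 5% level". The function names are hypothetical.

```python
import math
import random

def simulate_studies(n_studies, true_log_rr=0.0, seed=0):
    """Simulate (estimate, standard_error) pairs on the log relative-risk scale.

    Assumption: the food has no real effect (true log RR = 0), and each
    study's standard error is drawn from an arbitrary, made-up range.
    """
    rng = random.Random(seed)
    studies = []
    for _ in range(n_studies):
        se = rng.uniform(0.1, 0.5)
        estimate = rng.gauss(true_log_rr, se)
        studies.append((estimate, se))
    return studies

def is_significant(estimate, se, z_crit=1.96):
    """Crude stand-in for 'publishable': two-sided p < 0.05."""
    return abs(estimate / se) > z_crit

def typical_distance_from_one(studies):
    """exp(mean |log RR|): how far a typical study sits from RR = 1."""
    return math.exp(sum(abs(est) for est, _ in studies) / len(studies))

studies = simulate_studies(2000)
published = [s for s in studies if is_significant(*s)]

print("share 'published':", len(published) / len(studies))
print("typical RR distance, all studies:", typical_distance_from_one(studies))
print("typical RR distance, 'published':", typical_distance_from_one(published))
```

Even though nothing in this toy world causes or prevents anything, the studies that clear the significance filter sit much farther from 1 than the full set does, in both directions.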

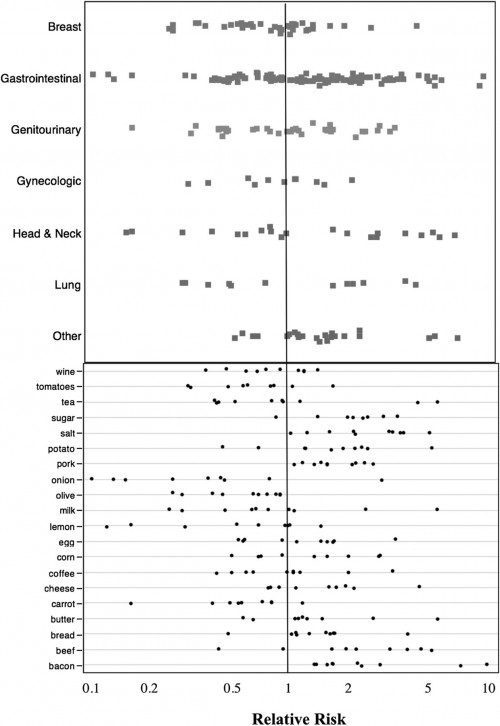

Look, I can’t make the point any better than Figure 1:

Any dot to the right of 1 means a study found that the food was associated with a higher risk of cancer. Any dot to the left found that the food was associated with a lower risk of cancer. The top half groups the studies by location of cancer. The bottom half groups them by type of food.

The take-home message is that everything seems to both cause and prevent cancer. If your goal is to confirm a belief you have, you will have no trouble finding a study in the literature to “prove” that anything either causes or prevents cancer.

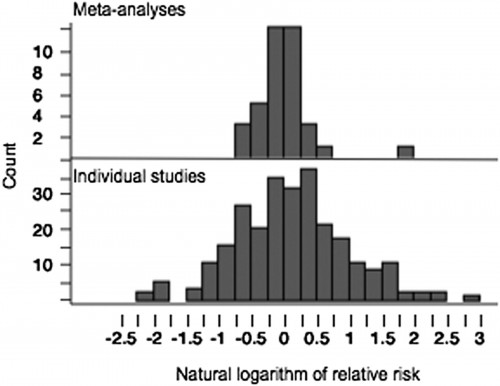

Meta-analyses tell a different story, though:

While individual studies are spread across a wide range of risks, the meta-analyses are grouped pretty tightly around “no effect”. That’s because, for most things, there either really is no effect, or the actual effect is pretty small.
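If you want to see why pooling pulls things toward the middle, here's a minimal sketch of one common approach, a fixed-effect inverse-variance meta-analysis. This is an assumption on my part about how to illustrate the idea; the meta-analyses in the review may well have used other models (random effects, for example). The relative risks and standard errors below are hypothetical numbers, not values from the figure.

```python
import math

def pool_fixed_effect(log_rrs, std_errors):
    """Fixed-effect inverse-variance pooling on the log relative-risk scale.

    Each study is weighted by 1 / SE^2, so precise studies count for more.
    Returns (pooled log RR, standard error of the pooled estimate).
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_rrs)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se

# Hypothetical studies of one food: two dramatic but noisy results,
# two modest but more precise ones.
relative_risks = [2.0, 0.5, 1.3, 0.8]
std_errors = [0.40, 0.40, 0.25, 0.25]

pooled, pooled_se = pool_fixed_effect(
    [math.log(rr) for rr in relative_risks], std_errors
)
ci_low = math.exp(pooled - 1.96 * pooled_se)
ci_high = math.exp(pooled + 1.96 * pooled_se)

print("pooled RR:", math.exp(pooled))
print("approx. 95% interval:", ci_low, "to", ci_high)
```

The "doubles your risk" and "halves your risk" studies mostly cancel each other out, and the pooled estimate is more precise than any single study that went into it.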

Now this doesn’t mean that there aren’t going to be things associated with cancer. As I acknowledge in my Upshot piece, a 2013 meta-analysis found that people who ate a lot of red meat had a 29% relative increase in all-cause mortality. But even then, you gotta dig into the details. Most of this was driven by processed red meats (i.e., bacon, sausage or salami), and you had to eat at least 1-2 servings (with an “s”) of red meat a DAY to be considered eating “a lot”. And it’s still epidemiological data.

So you can accuse me of many things in what I’m putting on the Upshot, but I think telling me I’m “cherry-picking” is somewhat misplaced. I’m specifically using systematic reviews and meta-analyses to avoid that. When you throw me a single study and say that proves me wrong… that’s “cherry-picking”.