Sometimes clinical trialists collect data but fail to publish the results. I know, because I am one of these bad trialists.

It happens remarkably often. Hiroki Saito and Christopher Gill randomly selected 400 RCTs registered at the ClinicalTrials.gov website. They found that

Among the 400 clinical trials, 118 (29.5%) failed to achieve PDOR [public disclosure of results] within four years of completion. The median day from study completion to PDOR among 282 studies (70.5%) that achieved PDOR was 602 days (mean 647 days, SD 454 days).

Christopher Jones reports similar results here. The non-publication of a significant proportion of trials skews the evidence base, particularly if failed trials are less likely to be published.

Failure to publish is also a significant ethical problem. Jones notes that

The non-publication of trial data also violates an ethical obligation that investigators have towards study participants. When trial data remain unpublished, the societal benefit that may have motivated someone to enroll in a study remains unrealized.

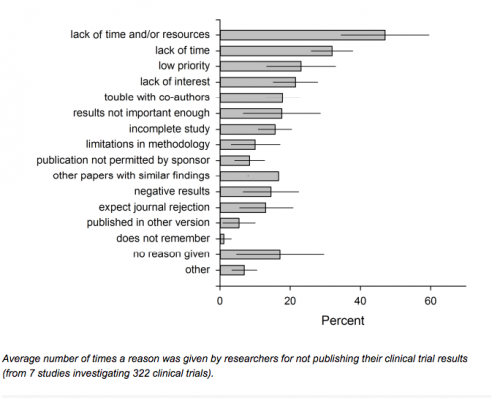

If it’s a publish-or-perish world, why do so many fail to publish? Roberta Scherer and her colleagues in the Journal of Clinical Epidemiology (see also here) did a systematic review of the reasons trialists give for not publishing their results. Their findings were even more discouraging than Saito and Gill’s.

Results

The mean full publication rate was 55.9% (95% CI, 54.8% to 56.9%) for 24 of 27 eligible reports providing this information, and 73.0% (95% CI, 71.2% to 74.7%) for 7 reports of abstracts describing clinical trials. 24 studies itemized 1,831 reasons for non-publication, and 6 itemized 428 reasons considered the most important reason. ‘Lack of time’ was the most frequently reported reason (weighted average = 30.2% (95% CI, 27.9% to 32.4%)) and the most important reason (weighted average = 38.4% (95% CI, 33.7% to 43.2%)).

I love this conclusion:

Conclusions

Across medical specialties, the main reasons for not subsequently publishing an abstract in full lies with factors related to the abstract author rather than with journals. (emphasis added)

It’s the authors, not the journals. “Lack of time” is a completely unsatisfying explanation: no one has more or less than 24 hours in a day. The trialists who did not publish had other priorities for their time.

But if large groups of trialists have the wrong priorities, we should ask whether the trialists are in situations that reinforce the wrong priorities. Here’s how it looks to me.

Academic medical centers (AMCs) care only about the revenue you generate, either from patient care or from winning grant competitions. This has two adverse effects. On the one hand, many AMCs steal time from clinicians by requiring them to see patients during time that is actually paid for by grants. On the other hand, AMCs do not care much about publishing. They care somewhat, because publishing more will help you win grants. But that’s the only reason they care. If you can get another grant without publishing anything from the last one, they are happy. It’s not really a publish-or-perish world. You can publish a lot and still perish.

Grants are underbudgeted, and within grant budgets, resources for data analysis and writing are notoriously thin. Grant writers propose to spend the last six months of their funding on analysis and writing. This is a joke, but grant review panels routinely look the other way, because everyone is telling the same joke. People also make optimistic assumptions about how quickly they can recruit patients and how much they will have to spend per patient. (In my unpublished RCT, I was off by a factor of five. It’s a long story…) Finally, the NIH routinely makes across-the-board cuts in grant budgets, because Congress has been cutting NIH’s budget. The net effect is that many trialists spend their funds on data collection and are left with too little to support data analysis or writing.

It’s really hard to make yourself write when the trial fails. And most trials do fail, either because they do not recruit enough patients or because the intervention has no effect. My unpublished trial involved a web-based technology for improving psychiatric patient follow-up. The tech worked fabulously. The doctors who tried it were enthusiastic about it. The problem was, I could never convince more than a handful of them to try it. Trying and failing to sell them was among the most dispiriting experiences of my life. I’m not afraid that the data can’t be published. It just hurts to write about them. I have been working on a paper for some time, but it’s been very difficult to see the manuscript through to completion.

This post is a commitment mechanism. Having gone public with my problem, I will finish this. You are my witnesses.

My point, though, is that the incentives surrounding clinical trialists do not support publication. We need stronger incentives, and they may need to be punitive. NIH Director Francis Collins has some ideas here.