My highly refined radar for policy-relevant facts is pulling in a new signal. OK, I admit, the radar has another name: Sarah Kliff. She reports that a new market survey from consulting firm Oliver Wyman* found that,

> [w]hen asked to choose between paying a penalty and purchasing coverage, 76 percent of the uninsured said they’d rather purchase coverage. That would reduce the number of people without insurance to 5 percent of the population and have 25 million Americans purchasing through the exchanges, just slightly higher than the 24 million that the CBO projected.

Kliff finds this simultaneously surprising and not. I agree with her. My gut says, “Wow!” and my brain says, “Duh!” Maybe the “wow” part comes from months (and months, and months) of gloom and doom about how health reform will play out. Maybe I was starting to believe it just couldn’t work. To be fair, this survey does not prove it will. It only suggests that the uninsured, at least, are receptive to it.

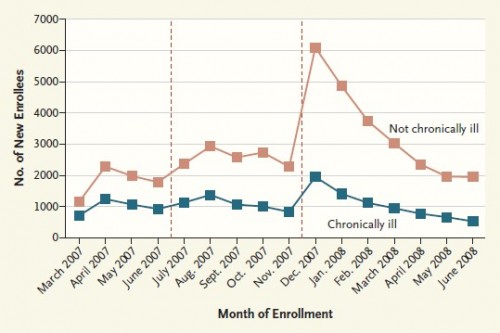

But here’s the “duh” part. As Kliff notes, Massachusetts, under a state law similar to the ACA, has a high take-up rate. More than that, there is evidence that the mandate had a lot to do with the law’s success. As the figure above, from an early 2011 paper by Chandra, Gruber, and McKnight, shows, premium subsidies were available to low-income individuals in the Massachusetts exchange in early 2007, yet it was the mandate, fully in effect by the end of 2007, that produced a big spike in enrollment. So, the mandate matters.

Subsidies matter too, and the ACA will provide them to a broader range of income groups (up to 400% of the federal poverty level) than does the Massachusetts law (up to 300% of the FPL). Finally, the penalty for not complying with the mandate will be higher under the ACA than in Massachusetts. So, all signs point to substantial participation by the uninsured.

The last “duh” is that this is, in fact, the principal aim of the law. A lot of work went into crafting something that would appeal to and assist the uninsured. In that light, it really isn’t all that surprising that it appears likely to do just that.

* Does anybody know whether the firm has released the methods for its survey?

UPDATE: Asked about survey methods.