Registering a study means specifying, in a public database like ClinicalTrials.gov, what the study’s sample criteria are, what outcomes will be examined, and what analyses will be done. It’s a way to guard against data mining and bias, though an imperfect one. Trial registration got a boost in 2004, when the International Committee of Medical Journal Editors (ICMJE) mandated registration for clinical trials published in member journals, listed here.

Publishing a study means having it appear in a peer-reviewed journal. Few people will ever look at a trial registry. Many more, including journalists, will read or hear about published studies. So, what gets published is important.

Not everything gets published. Many studies have examined trial registration incompleteness and selective publishing of registered data. [Links galore: 1, 2, 3, 4, 5, 6, 7, 8]. Perhaps as many as half of trials for FDA-approved drugs are unpublished even five years after approval. This is concerning, but what does it really mean? Does it imply bias? If so, does that bias differ by funding source (e.g., industry vs. non-industry)?

Trial registry data can be changed. That weakens the de-biasing, pre-commitment role registration is supposed to play. But sometimes changes are reasonable. After all, if you haven’t done any analysis yet and you think of a better way to do it, it’d be dumb to just blindly keep going with your registered study. You should do it the right way, and you should change your registered approach. However, changing registry data after the study is done, e.g., to match what you did, is a lot sketchier (or could be). All changes in ClinicalTrials.gov are stored, so one can try to infer whether it’s being gamed.

A study examined changes in ClinicalTrials.gov registered data for 152 RCTs published in ICMJE journals between 13 September 2005 and 24 April 2008. It doesn’t make the registry look very good. The vast majority of examined trials (123, or 81%) had changes in their registries.* The most commonly changed fields were primary outcome, secondary outcome, and sample size. The final registration entry for 40% and 34% of RCTs had missing secondary and primary outcome fields, respectively, though more than half of the missing data could be found in other fields. Already that’s a concern because it makes the registry hard to use if data are missing or in the wrong place. (I want to emphasize here that I’m not blaming investigators for this. Maybe they deserve the blame. But maybe the registry is also hard to use. I’ve never used it, so I cannot say.)

The study found that registry and published data differed for most RCTs, including on key secondary outcomes (64% of RCTs), target sample size (78%), interventions (74%), exclusion criteria (51%), and primary outcome (39%). Eight RCTs had primary or secondary outcome registry changes after publication, six of which were industry sponsored. That’s concerning. But eight is a small number relative to the 152 trials examined, so let’s not freak out.

Another study looking at all ~90,000 ClinicalTrials.gov-registered interventional trials as of 25 October 2012 assessed the extent to which registry entries had primary outcome changes and when changes were made, stratified by study sponsor.* It found that almost one-third of registered trials had primary outcome changes, changes were more likely for industry-sponsored studies, and industry sponsorship was associated with changes made after study completion date. I think we should be at least a bit concerned about that. (Again, maybe there are perfectly reasonable explanations, but it warrants some concern.)
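Because every version of a ClinicalTrials.gov entry is archived, this kind of check amounts to diffing versions. Here is a minimal Python sketch of the idea, assuming each trial’s archived versions have already been loaded into memory. The `Trial` and `RegistryVersion` classes, their fields, and the three toy trials are hypothetical illustrations, not the ClinicalTrials.gov data format or API, and the numbers they produce mean nothing. For each trial it finds primary-outcome changes, asks whether any came after the completion date, and tallies both by sponsor.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class RegistryVersion:
    """One archived version of a hypothetical registry entry."""
    changed_on: date
    primary_outcome: str


@dataclass
class Trial:
    """Hypothetical trial record; not the real ClinicalTrials.gov schema."""
    trial_id: str
    sponsor: str                    # e.g. "industry" or "non-industry"
    completion_date: date
    history: list[RegistryVersion]  # archived versions of the entry


def primary_outcome_changes(trial: Trial) -> list[date]:
    """Dates on which the primary outcome differs from the previous version."""
    versions = sorted(trial.history, key=lambda v: v.changed_on)
    return [
        cur.changed_on
        for prev, cur in zip(versions, versions[1:])
        if cur.primary_outcome != prev.primary_outcome
    ]


def tally_by_sponsor(trials: list[Trial]) -> dict[str, tuple[int, int, int]]:
    """Per sponsor: (trials, trials with any change, trials with a change after completion)."""
    totals, any_change, late_change = Counter(), Counter(), Counter()
    for t in trials:
        totals[t.sponsor] += 1
        changes = primary_outcome_changes(t)
        if changes:
            any_change[t.sponsor] += 1
        if any(d > t.completion_date for d in changes):
            late_change[t.sponsor] += 1
    return {s: (totals[s], any_change[s], late_change[s]) for s in totals}


# Toy data, invented for illustration only.
trials = [
    Trial("A", "industry", date(2010, 6, 1), [
        RegistryVersion(date(2008, 1, 1), "HbA1c at 12 weeks"),
        RegistryVersion(date(2011, 3, 1), "HbA1c at 24 weeks"),  # after completion
    ]),
    Trial("B", "non-industry", date(2010, 6, 1), [
        RegistryVersion(date(2008, 1, 1), "Pain score"),
        RegistryVersion(date(2009, 1, 1), "Pain score at 6 months"),  # before completion
    ]),
    Trial("C", "industry", date(2010, 6, 1), [
        RegistryVersion(date(2008, 1, 1), "Mortality"),  # never changed
    ]),
]
print(tally_by_sponsor(trials))
# {'industry': (2, 1, 1), 'non-industry': (1, 1, 0)}
```

A flagged change is not proof of gaming, since some changes have good reasons; the sketch only shows where to look, and a real analysis would need the actual archived records and a careful definition of what counts as a change.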

What gets registered? When we’re talking about trials aimed at FDA approval, there are different types.* There are pre-clinical trials in which drugs are tested, but not in humans. Then there are several phases (I, II, III) of clinical trials that ramp up in the number of people who receive the drug and shift in relative emphasis between safety and efficacy. (As you might imagine, safety is emphasized first.) Post-market trials (phase IV) look at longer-term effects from real-world use. Because trials cost money, it’s likely that drugs that make it to later trials tend to be more promising (i.e., are more likely to show positive effects).

From a set of registered trials, only a subset of which are published in the literature, how does one assess publication bias? The easy way is to look at the subset of matched published and registered trials to see what registered findings reach the journals. Do they skew positive? The hard way seems impossible: What about studies that are registered but never published? Do those harbor disproportionately negative findings? We can’t really know, but there’s a clever way to infer an answer.

That clever way runs through the FDA. To seek approval of a drug, including a new molecular entity (NME), a manufacturer files a new drug application (NDA), and in an NDA drug manufacturers are required to submit all studies to the FDA. I infer, from what I read, that there is no comparably complete record for trials outside an NDA. Therefore, the NDA offers a nice test: which subset of the results sent to the FDA gets published? We might infer that the estimate applies to other trials, those for which we can’t see a full set of results.

A study of all efficacy trials (N=164) for approved NDAs (N=33) for new molecular entities from 2001 to 2002 found that 78% were published. Those with outcomes favoring the tested drug were more likely to be published. Forty-seven percent of outcomes in the NDAs that did not favor the drug were not included in publications. Nine percent of conclusions changed (all in a direction more favorable to the drug) from the FDA review of the NDA to the paper. Score this as publication bias. And don’t blame journal editors or reviewers: the authors wrote that investigators told them studies weren’t published because they weren’t submitted to journals.
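The logic of the NDA test fits in a few lines. Because the FDA holds every efficacy trial in an application, one can compute publication rates separately for trials whose results favored the drug and for those whose results did not; a gap between the two rates is what publication bias looks like. The following Python sketch assumes a hypothetical `SubmittedTrial` record with two yes/no fields, and the toy data are invented, not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class SubmittedTrial:
    """A hypothetical efficacy trial from an FDA submission."""
    favors_drug: bool  # did the results favor the tested drug?
    published: bool    # did the trial appear in a journal?


def publication_rates(trials: list[SubmittedTrial]) -> dict[str, float]:
    """Share of trials published, split by whether results favored the drug."""
    rates = {}
    for label, flag in (("favorable", True), ("not favorable", False)):
        group = [t for t in trials if t.favors_drug == flag]
        # NaN signals an empty group rather than a misleading 0.
        rates[label] = sum(t.published for t in group) / len(group) if group else float("nan")
    return rates


# Toy data, invented for illustration only.
toy = (
    [SubmittedTrial(True, True)] * 9 + [SubmittedTrial(True, False)] * 1
    + [SubmittedTrial(False, True)] * 5 + [SubmittedTrial(False, False)] * 5
)
print(publication_rates(toy))  # {'favorable': 0.9, 'not favorable': 0.5}
```

The key design point is the denominator: with only published papers in hand, the unpublished trials, and hence both rates, would be invisible.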

But is this an industry-driven bias? A Cochrane Collaboration review examined and meta-analyzed 48 published studies from 1948 (!!!) through August 2011 on the subject of whether industry-sponsored drug and device studies have more favorable outcomes, relative to non-industry ones. Industry-sponsored studies were more likely to report favorable results and fewer harms.

This sounds like industry sponsorship might produce a bias, but it could be that industry just tends to study more promising drugs and runs more late-phase trials.

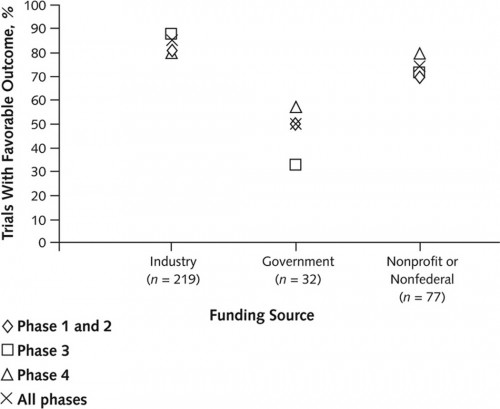

Another study looked at this. It examined 546 ClinicalTrials.gov-registered trials of anticholesteremics, antidepressants, antipsychotics, proton-pump inhibitors, and vasodilators conducted betweeen 2000 and 2006 to assess association of funding source with favorability of published outcomes. Industry-funded trials were less likely to be published (32% for industry vs 56% for non-industry). Among the 362 (66%) of published trials, industry-sponsored ones were more likely to report positive outcomes (85% for industry-, 50% for government-, and 72% for nonprofit/non-federally-funded). Industry-funded trials were more likely to be phase 3 or 4, so maybe that explains higher favorability of findings.

Nope. Industry-funded outcomes for phase 1 and 2 trials were more favorable as well (see chart below).

Another study, however, found no association of funding source with positive outcomes. It looked at 103 published RCTs on rheumatoid arthritis drugs from 2002-2003 and 2006-2007.

A study looked at the extent to which ClinicalTrials.gov-registered studies were published.* Its sample of 677 studies drew from trials registered as of 2000 and completed by 2006. Just over half the trials were industry sponsored, with 18% government- and 29% nongovernment/nonindustry-sponsored. Industry-sponsored trials were less likely to be published than nonindustry/nongovernment ones (40% vs. 56%), but there was no statistically significant difference compared to government-sponsored trials. In fact, NIH-sponsored trials were only published 42% of the time.

I think that’s worth emphasizing: We should be suspicious of all publication oddities and omissions, not just those associated with industry. Most NIH-sponsored trials in that sample (58%) went unpublished, too.*

* As Aaron has reminded me, not all ClinicalTrials.gov-registered studies are for drugs or devices. Many non-industry studies, for example, concern aspects of health care delivery that pose far less risk to patients. It may not make sense to analyze these alongside those for drugs and devices, which place patients at higher risk. It also may matter less if such studies change their registries, publish all their findings, or are even registered at all. In other words, given constraints on investigator resources, we might reasonably hold drug and device trials to higher standards than others.