From Justin Dimick and Andrew Ryan:

The association between policy changes and subsequent outcomes is often evaluated by pre-post assessments. Outcomes after implementation are compared with those before. This design is valid only if there are no underlying time-dependent trends in outcomes unrelated to the policy change. If clinical outcomes were already improving before the policy, then using a pre-post study would lead to the erroneous conclusion that the policy was associated with better outcomes.

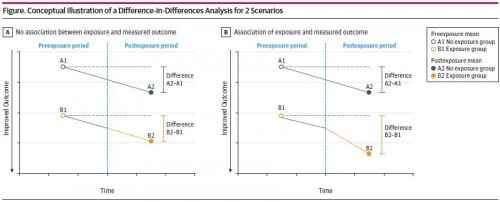

The difference-in-differences study design addresses this problem by using a comparison group that is experiencing the same trends but is not exposed to the policy change. Outcomes after and before the policy are compared between the comparison group without the exposure (group A) and the study group with the exposure (group B), which allows the investigator to subtract out the background changes in outcomes. Two differences in outcomes are important: the difference after vs before the policy change in the group exposed to the policy (B2 − B1; see Figure) and the difference after vs before the date of the policy change in the unexposed group (A2 − A1). The change in outcomes related to implementation of the policy, beyond background trends, can then be estimated from the difference-in-differences analysis as follows: (B2 − B1) − (A2 − A1). If there is no relationship between policy implementation and subsequent outcomes, then the difference-in-differences estimate is equal to 0 (Figure, A). In contrast, if the policy is associated with beneficial changes, then the outcomes following implementation will improve to a greater extent in the exposed group than in the unexposed group. This will be shown by a nonzero difference-in-differences estimate (Figure, B).
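To make the arithmetic concrete, here is a minimal Python sketch of the calculation described above. The function name `diff_in_diff` and the outcome values are hypothetical, invented purely for illustration; they do not come from Dimick and Ryan or from any real study. Lower values are assumed to mean better outcomes (for example, a complication rate in percent).

```python
def diff_in_diff(a1, a2, b1, b2):
    """Return the difference-in-differences estimate (B2 - B1) - (A2 - A1).

    a1, a2: outcome in the unexposed group A before and after the policy date
    b1, b2: outcome in the exposed group B before and after the policy change
    """
    return (b2 - b1) - (a2 - a1)


# Hypothetical outcome rates (%), made up for illustration only.
a1, a2 = 10.0, 8.0  # group A: improves by 2 points from the background trend alone
b1, b2 = 10.0, 5.0  # group B: improves by 5 points after the policy

# A naive pre-post comparison in the exposed group attributes the
# entire change, including the background trend, to the policy.
pre_post = b2 - b1

# Difference-in-differences subtracts out the background change seen in group A.
did = diff_in_diff(a1, a2, b1, b2)

print(pre_post)  # -5.0
print(did)       # -3.0
```

With these made-up numbers, the pre-post comparison suggests a 5-point improvement, while the difference-in-differences estimate credits the policy with only 3 points, because 2 points of improvement also occurred in the unexposed group. If both groups had changed by the same amount, the estimate would be 0, matching the scenario in Figure, A.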