Many people are contrasting the Oregon study—which didn’t find statistically significant effects of coverage on biomarkers associated with physical health—with the new Massachusetts study—which found a statistically significant mortality benefit of coverage. How could these two findings coexist in a rational world? There are various hypotheses, one of which is that the Oregon study was underpowered (too small a sample size) to find physical health effects, as we’ve documented. (There are so many posts on this. Here’s just one.)

Just to illustrate the difference in power of the two studies, I did a thought experiment. What if we presume that the same mortality effect that was found in the Massachusetts study applied in the Oregon case? Would the Oregon study have been able to detect it with statistical significance?

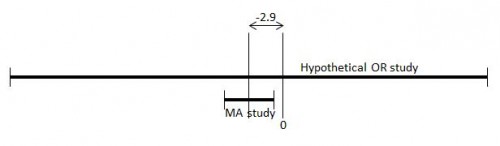

The answer is no, and it’s not even remotely close. The Oregon (OR) study had a sample size about a factor of 100 below that of the Massachusetts (MA) study. That means the error bars would have been about 10 times larger. Here’s what that looks like:

The MA study found that mortality associated with the MA health reform was 2.9% below that of the study’s control group. The 95% confidence interval is from -4.8% to -1%. This is illustrated with the lower bar in the figure, marked “MA study.” The center of that bar is 2.9 units below 0, as indicated. The error bars do not overlap zero. This is a statistically significant effect.
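To make the reading of that bar concrete, here is a minimal sketch of how a 95% confidence interval translates into a standard error and a significance test. It assumes the published interval is a symmetric, normal-approximation (Wald) interval, which is consistent with -4.8% to -1% being centered on -2.9%. The helper `summarize_ci` is hypothetical and is not taken from the MA study; the p-value it reports is only the one implied by that assumption.

```python
from statistics import NormalDist

# Two-sided 95% critical value from the standard normal (about 1.96).
Z_95 = NormalDist().inv_cdf(0.975)


def summarize_ci(lower: float, upper: float) -> dict:
    """Back out a point estimate, standard error, and z-statistic from a
    symmetric 95% confidence interval (normal approximation assumed)."""
    estimate = (lower + upper) / 2          # midpoint of the interval
    half_width = (upper - lower) / 2        # distance from midpoint to each end
    se = half_width / Z_95                  # half-width = 1.96 * SE
    z = estimate / se                       # Wald z-statistic against zero
    p = 2 * (1 - NormalDist().cdf(abs(z)))  # implied two-sided p-value
    # Significant at the 5% level exactly when the interval excludes zero.
    significant = lower > 0 or upper < 0
    return {"estimate": estimate, "half_width": half_width,
            "se": se, "z": z, "p": p, "significant": significant}


# MA result: relative change in mortality, in percent.
ma = summarize_ci(-4.8, -1.0)
print(ma)
```

The interval's half-width of 1.9 points corresponds to a standard error of just under 1 point, so a 2.9-point drop sits about three standard errors from zero.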

Now look at the hypothetical OR study “result.” The error bars are huge, about 10 times bigger than those of the MA study, overlapping the origin by a country mile. The 95% confidence interval is the MA point estimate of -2.9% plus or minus 19 percentage points (10 times the MA half-width of 1.9), so it runs from -21.9% to 16.1%. Such a result would not be statistically significant and, for good reason, no such thing was published. There was insufficient power (sample size) to find anything on mortality that we didn’t already know. Such a finding would leave us scratching our heads as to whether health insurance reduced mortality by nearly 22%, made people 16% more likely to die, or did something in between. I think it’s safe to say that most people have no trouble believing that if health insurance does anything, its effect is somewhere between 22% more likely to save lives and 16% more likely to kill people.
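The thought experiment can be sketched in a few lines. This assumes, as the post does, that the OR study would have produced the same point estimate as the MA study with a sample about 100 times smaller, and it uses a normal approximation throughout. The names `scaled_interval` and `power_two_sided` are hypothetical helpers written for this illustration, and the power figure is a back-of-the-envelope calculation, not something either study reported.

```python
import math
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)  # about 1.96

# MA result (relative change in mortality, in percent) and its 95% CI.
MA_ESTIMATE = -2.9
MA_HALF_WIDTH = (-1.0 - (-4.8)) / 2  # 1.9 points

# Assumption from the post: the OR sample is about 1/100 the size of the MA sample.
SAMPLE_RATIO = 100


def scaled_interval(estimate, half_width, sample_ratio):
    """Widen a 95% CI for a sample `sample_ratio` times smaller.
    Standard errors scale as 1/sqrt(n), so the half-width grows by sqrt(ratio)."""
    new_half = half_width * math.sqrt(sample_ratio)
    return estimate - new_half, estimate + new_half


def power_two_sided(true_effect, se, z_crit=Z_95):
    """Approximate probability that a two-sided z-test at the 5% level
    rejects zero when the true effect is `true_effect` with standard error `se`."""
    shift = true_effect / se
    nd = NormalDist()
    # Reject if the z-statistic lands below -z_crit or above +z_crit.
    return nd.cdf(-z_crit - shift) + (1 - nd.cdf(z_crit - shift))


lo, hi = scaled_interval(MA_ESTIMATE, MA_HALF_WIDTH, SAMPLE_RATIO)
or_se = (hi - lo) / 2 / Z_95  # standard error implied by the widened interval
print(f"Hypothetical OR 95% CI: {lo:.1f}% to {hi:.1f}%")
print(f"Power to detect a {MA_ESTIMATE}% effect: "
      f"{power_two_sided(MA_ESTIMATE, or_se):.0%}")
```

Under these assumptions the power comes out to roughly 6%, barely above the 5% rate at which a study of a treatment with no effect at all would "find" one by chance.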

I am not suggesting it’s not worthwhile to compare the MA and OR study results. I’m just saying we should be mindful of the tremendous differences between the two studies in sample size, as well as in statistical methods and regional context. Burn the above chart into your head. If that fails, consider a tattoo.