A new paper in JAMA Internal Medicine by McCrum, Joynt, Orav, Gawande, and Jha delivers good news about hospital quality ratings. Of course, it’s wonky good news, so come with me into the weeds and I’ll explain.

In an accompanying commentary, Smith and Shannon begin with Los Angeles County’s experience with publicly reported restaurant quality ratings. Hospitalizations for food-related illnesses fell 20% after LA restaurants were required to display a quality-based letter grade in their front windows. Could the same happen for hospitals?

There are many reasons to be skeptical. One is that health care is far more complex and varied than the delivery of a restaurant meal. (For one thing, the outcome depends on far more consumer, that is, patient, factors.) As a consequence, even if consumers were motivated to use quality information, they might reasonably worry that the few measures available don’t apply to their condition. As Smith and Shannon write, “Does it matter how a hospital does on cardiac surgery when you are going in for hip replacement surgery?”

The study by McCrum et al. suggests that it does matter. Using Medicare discharge data from 2,322 hospitals for 2008-2009, they examined the extent to which hospital performance on the three publicly reported 30-day mortality measures (for heart attack, heart failure, and pneumonia discharges) predicts mortality for other medically and surgically treated conditions. This gets at whether top-performing hospitals are optimizing for what is measured and observed (akin to teaching to the test) or whether high quality on a few mortality measures signals high quality more generally.

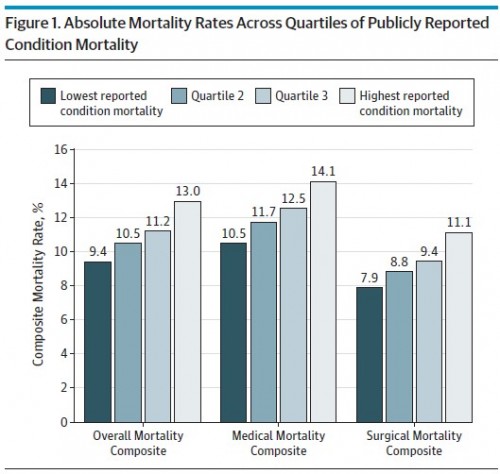

Or, put more simply, can “good” and “bad” hospitals be identified with a few mortality measures? The following two figures from the paper illustrate that they can. In Figure 1, hospitals are grouped into quartiles of a statistic that blends the three publicly reported mortality measures into one. The figure then shows mortality by quartile, separately for all conditions, medically treated conditions, and surgically treated conditions. High-performing hospitals (those in the lowest quartile of mortality on the reported measures) also have low mortality rates overall and for both medical and surgical conditions. The reverse holds for low-performing hospitals. (The analysis controls for patient comorbidities.)

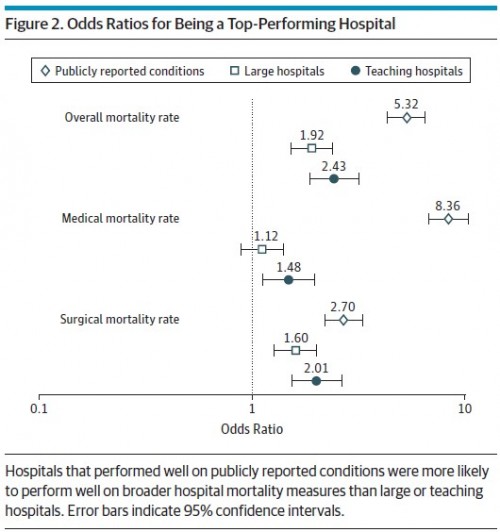

Figure 2 shows that top-performing hospitals (those in the lowest quartile of mortality on the publicly reported measures) have more than five times the odds of being top performers on overall mortality and more than eight times the odds of being top performers on medical mortality. The results are less dramatic for surgical mortality, for which a top performer on the publicly reported measures has 2.7 times the odds of being a top performer. Notably, the publicly reported mortality rates are a stronger signal than large-hospital or teaching-hospital status, which consumers might otherwise take as indicators of quality.
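
“Five times the odds” is easy to misread as “five times as likely,” but an odds ratio is not a ratio of probabilities. The sketch below computes both from a 2x2 table of hospitals. The `TwoByTwo` helper is hypothetical, and the counts are made up for round numbers; they are not the paper’s data. It is also the plain, unadjusted calculation, not a reproduction of the paper’s risk-adjusted analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Hypothetical helper: hospitals cross-classified by two yes/no flags.

    exposed_yes:   top quartile on reported measures AND top performer overall
    exposed_no:    top quartile on reported measures, NOT top performer overall
    unexposed_yes: not top quartile on reported measures, but top performer overall
    unexposed_no:  neither
    """
    exposed_yes: int
    exposed_no: int
    unexposed_yes: int
    unexposed_no: int

    def odds_ratio(self) -> float:
        # Odds within a group = (# yes) / (# no). The ratio of the two groups'
        # odds simplifies to the cross-product (a * d) / (b * c).
        return (self.exposed_yes * self.unexposed_no) / (
            self.exposed_no * self.unexposed_yes
        )

    def risk_ratio(self) -> float:
        # Ratio of the two probabilities of "yes", for comparison.
        p_exposed = self.exposed_yes / (self.exposed_yes + self.exposed_no)
        p_unexposed = self.unexposed_yes / (self.unexposed_yes + self.unexposed_no)
        return p_exposed / p_unexposed


# Made-up counts for illustration only (NOT from McCrum et al.):
# 40 of 100 flagged hospitals are top performers overall vs. 10 of 100 others.
table = TwoByTwo(exposed_yes=40, exposed_no=60, unexposed_yes=10, unexposed_no=90)
print(f"odds ratio {table.odds_ratio():.2f}, risk ratio {table.risk_ratio():.2f}")
# prints "odds ratio 6.00, risk ratio 4.00"
```

With these invented counts the odds ratio (6.0) exceeds the risk ratio (4.0). The gap grows as the outcome becomes more common, and being a top performer is not rare (by construction a quarter of hospitals are), so an odds ratio of five overstates how much more *likely* a hospital is to be a top performer.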

The authors summarize:

> A hospital’s 30-day mortality rates for Medicare’s 3 publicly reported conditions—acute myocardial infarction, congestive heart failure, and pneumonia—were correlated with overall hospital mortality rates, even in clinically dissimilar fields. Hospitals in the top quartile of performance on the publicly reported mortality rates had greater than 5-fold higher odds of being top performers for a combined metric across 19 common medical and surgical conditions, translating into absolute overall mortality rates that were 3.6% lower for the top performers than for the poor performers. Finally, performance on the publicly reported conditions far outperformed 2 other widely used markers of quality: size and teaching status. […]
>
> Our results suggest that there may be substantial value in efforts to engage and empower patients to use publicly reported hospital performance to make informed choices regarding where to seek care, irrespective of the condition that brings them to the hospital.

Of course, it is hard to engage and empower patients to pay attention to clinical quality. But to the extent we can, the results of this study are good news. They suggest there is a genuinely valuable signal in the reported mortality measures, one that distinguishes hospital quality more broadly than the specific conditions the measures cover.