In 2012, NEJM published a randomized study of how physicians use financial conflict of interest (COI) disclosures, by Aaron Kesselheim and eight others.

From the paper’s introduction and the work it cites, we learn the following:

- Echoing my struggles in this area, COI disclosure to clinical trial participants may not help them because they cannot evaluate its relevance, among other reasons.

- A systematic review of researchers’ attitudes about COI, published in 2005, concluded that COIs act subconsciously and that their disclosure doesn’t eliminate bias or change the quality of research.

- Most physicians say they would discount study findings from sources viewed as “conflicted,” but in one study COI information didn’t affect self-reported likelihood of prescribing a new drug.

- Several other studies found the opposite, that COI disclosure leads physicians to discount trial findings.

- Other studies found that doctors’ COI disclosure can enhance patients’ trust in their doctors.

We should pause and recognize that this collection of work suggests that COI disclosure can have completely different effects on confidence in doctors and findings, depending on the study and, in particular, the population of focus (physicians vs. patients). Such disclosure may be like a box of chocolates, in the Forrest Gumpian sense. As such, and in light of point 2, claims that disclosure comes anywhere near systematically addressing the bias that such conflicts may create, or our interpretation of them, are suspect.

The Kesselheim study is most relevant to points 3 and 4, and its findings support the latter. The researchers provided 269 American Board of Internal Medicine-certified physicians with abstracts of hypothetical research on made-up drugs for hyperlipidemia (“lampytinib”), diabetes (“bondaglutaraz”), and angina (“provasinab”), said to have been recently approved by the FDA.* The abstracts varied in drug, funding source (no mention, NIH, or one of the top 12 global pharmaceutical companies), and methodological rigor (three levels). Each participant received three abstracts at random, chosen so that they spanned the full range of funding sources and the full range of rigor levels.

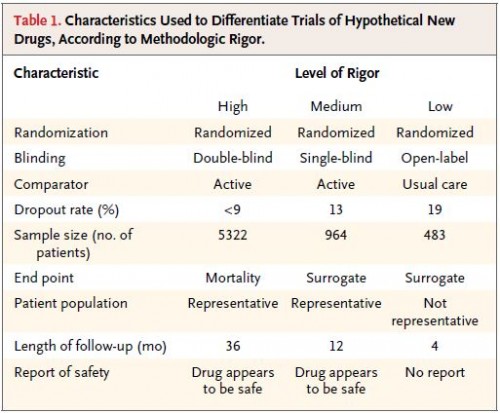
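For readers who like to see a design spelled out, the assignment scheme described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors’ actual randomization procedure: the function name `assign_abstracts`, the short labels for funding and rigor, and the choice to give each participant each drug exactly once are all my own.

```python
import random

# The three fictitious drugs named in the study.
DRUGS = ["lampytinib", "bondaglutaraz", "provasinab"]
# Shorthand labels for the three funding conditions (labels are assumptions).
FUNDING = ["no mention", "NIH", "industry"]
# Shorthand labels for the three rigor levels (labels are assumptions).
RIGOR = ["low", "medium", "high"]


def assign_abstracts(rng):
    """Return three (drug, funding, rigor) abstracts for one participant.

    Each dimension is shuffled independently and then zipped together, so
    every funding source and every rigor level appears exactly once.
    Assumption: each drug also appears exactly once per participant.
    """
    drugs = rng.sample(DRUGS, k=3)
    funding = rng.sample(FUNDING, k=3)
    rigor = rng.sample(RIGOR, k=3)
    return list(zip(drugs, funding, rigor))


if __name__ == "__main__":
    rng = random.Random(2012)  # fixed seed so the example is repeatable
    for abstract in assign_abstracts(rng):
        print(abstract)
```

The point of shuffling each dimension separately is that funding source and rigor are uncorrelated within a participant’s set, which is what makes it possible to compare funding sources while controlling for rigor.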

Since “methodological rigor” is vague, let’s be clear what the researchers meant by their three levels of it. This chart tells you all you need to know:

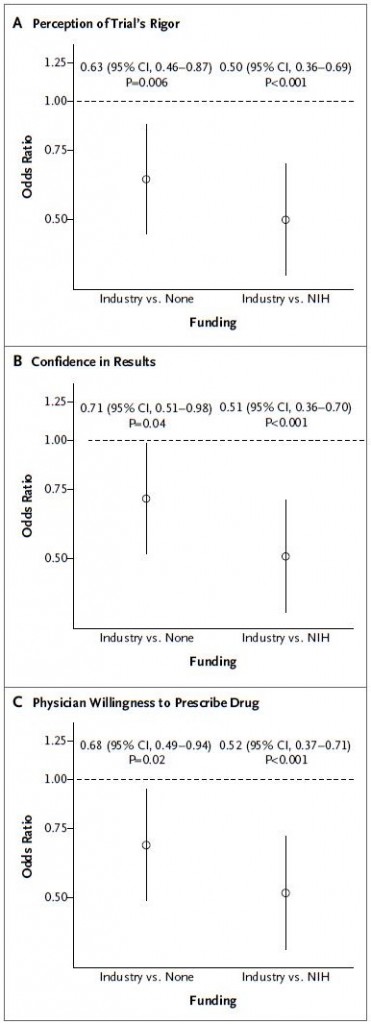

The study results show that the participants differentiated and understood the levels of methodological rigor. Controlling for rigor, industry-funded results were viewed as less credible and actionable than those funded by NIH or without indicated source of support. The following charts, with results adjusted for methodological rigor, tell the story.

Do these results reflect rational behavior? Here’s how they might: there are aspects of a study’s quality that, in today’s research reporting environment, are hard for a reader to assess. These include withholding of critical data, failure to publish negative findings, and ghostwriting of papers. The authors mention all of these potential problems and cite work documenting controversies over each of them (data withholding, publication bias, and ghostwriting) in industry-funded research. To the extent that these problems are more pervasive in industry-sponsored work than in work with other funding, it’s rational to downgrade the former accordingly (though precisely by how much is unclear).

However, we must acknowledge that citing some examples of problems with industry-sponsored work does not, by itself, demonstrate that bias, on the whole, is more common under that kind of funding, let alone by how much. A key danger in assuming that other types of sponsorship are not accompanied by significant conflicts is that it could lead to the wrong policy solution. For instance, would more publicly funded trials help? It’s possible, but can we say how much more credible they’d be, beyond our own intuition based on anecdotes? This is an important question. (Please understand, I am not against more publicly funded trials.)

This points directly to some other ways to alleviate the concerns COIs raise, and not just for industry-sponsored studies but for all studies: beef up research reporting. The authors conclude,

> Financial disclosure is important, but more fundamental strategies, such as avoiding selective reporting of results in reports of [all] trials, ensuring protocol and data transparency, and providing an independent review of end points, will be needed to more effectively promote the translation of high-quality clinical trials — whatever their funding source — into practice.

[Note: I substituted “all” for the authors’ “industry-sponsored” in this quote. Given how the quote ends, it seems more in keeping with what they intended.]

In the near future, Bill and I intend to write more about what we might do to beef up research reporting in these and other ways. We think it’s the right way to take COI seriously.

Addendum: For irony’s sake only, I looked at the study authors’ COI disclosures. Three of the nine authors reported having received compensation for speaking/appearances or data monitoring/analysis activities from Merck, Novartis, AstraZeneca, Genzyme/Sanofi, or PhRMA. It is not my view that this in any way reduces the credibility of the study, which was supported by a grant from the Edmond J. Safra Center for Ethics at Harvard University, a career development award from the Agency for Healthcare Research and Quality, a Robert Wood Johnson Foundation Investigator Award in Health Policy Research, a fellowship at the Petrie–Flom Center for Health Law Policy, Biotechnology, and Bioethics at Harvard Law School, and a grant from the National Cancer Institute.

* My main disappointment is that I was not invited to the meeting at which these fictitious drug names were cooked up.