We’ve all heard about Oregon’s Medicaid experiment, but there was another one recently that deserves a bit of attention too: Wisconsin’s.

Unlike Oregon, Wisconsin did not randomize people into eligibility for Medicaid. But it did do something that makes it a good target for study. In January 2009, in Milwaukee County, the state automatically enrolled 12,941 childless adults with incomes below 200% of the federal poverty level (FPL) into the BadgerCare Plus Core Plan, a less generous version of the state's standard Medicaid program, as described by Thomas DeLeire and colleagues in a recent Health Affairs paper.

The Core Plan’s benefit is similar to, but less generous than, Wisconsin’s existing Medicaid/Children’s Health Insurance Program (called BadgerCare Plus). Unlike BadgerCare Plus, the Core Plan has a restrictive drug formulary covering mostly generic drugs; includes dental coverage but only for emergency services; and has no coverage for hearing, vision, home health care, nursing home, or hospice services. Reimbursement for physical, occupational, speech, and cardiac rehabilitation is provided for a limited number of visits. The Core Plan does not cover inpatient mental health and substance abuse services, and it covers outpatient mental health services only when provided by a psychiatrist.

The research candy here is that enrollment was automatic. This potentially goes a long way toward addressing confounding due to self-selection. Still, it is not as good as a randomized design: there is no group randomized to a control arm to serve as a comparison, so we don't know for sure what the counterfactual is. In cases like this, there are two choices: compare pre-enrollment with post-enrollment outcomes for the same group of people, or cook up a comparison group (e.g., a matched set of similar individuals not subject to the policy). Both are fine approaches, though each has limitations. In social science, you usually can't have it all!
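To make the two choices concrete, here is a minimal sketch in standard notation (the symbols are my own illustration, not taken from the paper). Let $\bar{Y}$ be average use of some type of care, with superscripts marking the periods before and after enrollment and subscripts marking enrollees ($T$) and a hypothetical comparison group ($C$):

```latex
\begin{align*}
\hat{\Delta}_{\text{pre-post}} &= \bar{Y}_T^{\text{post}} - \bar{Y}_T^{\text{pre}} \\
\hat{\Delta}_{\text{comparison}} &= \left(\bar{Y}_T^{\text{post}} - \bar{Y}_T^{\text{pre}}\right)
  - \left(\bar{Y}_C^{\text{post}} - \bar{Y}_C^{\text{pre}}\right)
\end{align*}
```

The first attributes any change among enrollees to coverage, implicitly assuming their utilization would otherwise have stayed flat. The second (a difference-in-differences estimate) nets out the change over the same period in the comparison group, assuming the two groups would otherwise have followed the same trend. Those assumptions are where each approach's limitations come in.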

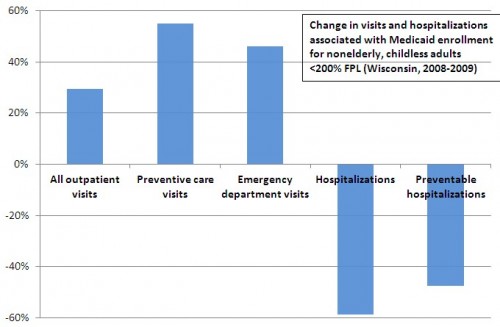

That's the set-up for the DeLeire et al. study, which is a pre-post design. Of the 12,941 automatic Core Plan enrollees, the authors were able to match pre- and post-enrollment encounter and claims data for 9,619. They then assessed how coverage was associated with changes in utilization of various types of care. Their paper provides more detail; my chart, drawn from a subset of their reported results, gives an overview.

As you can see, outpatient visits, preventive care, and ED visits went up. This suggests better access to care. Other results point to potential limitations of the existing delivery system and the nature of the Core Plan benefit. ED visits for conditions that could have been addressed in less intensive settings rose almost 40%, and ED visits for mental health or substance use issues rose 344% (neither is shown in the chart). Clearly some of these conditions could be better and more efficiently addressed in other settings.

The results also show big reductions in hospitalizations, including, importantly, hospitalizations that are preventable with outpatient care. This suggests the possibility of cost offsets, though the authors did not assess costs in the study. Nor did they study changes in health conditions. Core Plan enrollees are using a different mix of care. But are they in better health?

Other key points:

- The “vast majority” of Core Plan enrollees were transitioned into an HMO 2-5 months after enrollment.

- At the time of the study, Milwaukee County had a (since discontinued) General Assistance Medical Program that reimbursed hospitals and other providers for care for the indigent at Medicaid rates. Presumably many of those automatically enrolled in the Core Plan could or did receive care financed by this program while they were uninsured, or for care that the Core Plan didn't cover.

- It wasn't clear to me from the paper (or I missed it) how the state identified the automatic enrollees. Was it upon an encounter with the health system? Without knowing more about the selection process, it is hard to know for sure how well the study side-stepped confounding. If enrollment were triggered by an encounter, or by a certain type of encounter (e.g., an ED visit), enrollees would be selected at a moment when they were already using care, which could bias the pre-post comparison in a way that other enrollment methods wouldn't.

Notwithstanding its limitations, this is an interesting paper on an important policy shift. We don’t see too many natural experiments like this, so we should exploit them when they occur and take note of studies that do so.