About a month ago, I wrote a post congratulating Sharon Begley for her well-reasoned reporting on a paper in Health Affairs that argues that the extra money we spend on cancer care is worth it because it buys us increased survival time. I also wrote a follow-up post here.

On Monday, the authors posted a reply to criticism on the Health Affairs blog. I have no idea if they’re responding to my criticism, but I wanted to address some of their points anyway. Here we go:

> First, some critics have argued that we should have looked at mortality rather than survival. That is, we should have measured how many people have died from cancer, rather than how long people who are diagnosed with the disease live with it. There is an important distinction, as we note later. Some have argued that survival estimates suffer from a “lead-time” bias because the United States was differentially diagnosing cancer earlier.
>
> Our Paper Looks At Mortality Estimates As Well As Survival Estimates
>
> This criticism is somewhat puzzling since we also examine mortality trends in our paper, and the same story holds. As discussed both in the main paper and an extensive technical appendix, we examined trends in cancer mortality rates over a similar period – 1982 through 2005 – using the WHO Cancer Mortality Database. The mortality results for the United States for the most controversial cancers (prostate and breast) fell relative to the EU. The results implied that if the US had progressed in its cancer care at the same rate as the EU over this period, there would have been 87,000 additional breast cancer deaths and 222,000 prostate cancer deaths. These correspond to a gain in life expectancy of 1.8 years for prostate cancer patients and 0.8 year for breast cancer—similar to our survivorship analysis. The bottom line is that looking at mortality also supports our finding of a widening gap between the United States and the European countries we investigated.

I wish they had addressed Don McCanne’s published concerns about that appendix:

> Yet the data provided in the Appendix on eleven malignancies showed that four did not reach statistical significance at a p value of 0.05, three actually showed additional deaths incurred in the US, and of the four that supposedly showed additional deaths avoided, two — prostate cancer and breast cancer — are known to manifest significant lead-time bias plus overdiagnosis because of the very large numbers that would not lead to unfavorable outcomes. This hardly supplies support for their decision to dismiss lead-time bias.
>
> Their results were dependent on the data in the Appendix, yet the errors in it suggest that it was not critically reviewed. The text includes the phrases, “prostate cancer and death cancer,” “139 prostate cancer deaths averted per 100,000 breast cancer patients,” “a prevalence of 2,400,000 prevalent prostate cancer cases,” and “a gain in life expectancy of survival of 0.8 year for breast cancer patients.” (Statistical survival time cannot be added to life expectancy because of lead-time bias.)
>
> The Appendix is essential to their results, yet it now seems to be discredited. Therefore, their conclusion on the dollar value of survival gain is not validated.

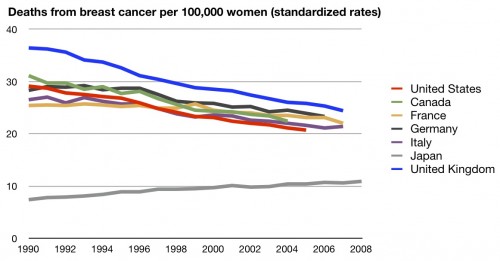

Even so, I’ll concede that we may have lower mortality rates than many other countries when it comes to breast cancer. I’ve posted this before:

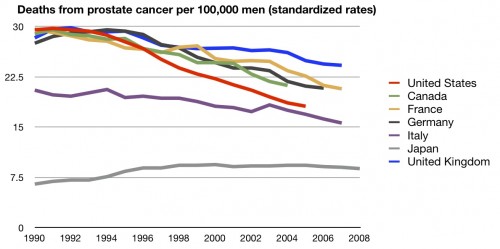

I’ll even concede prostate cancer:

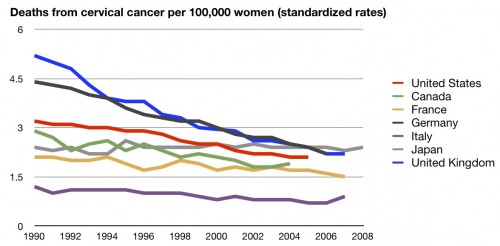

But they are cherry-picking. Here’s cervical cancer:

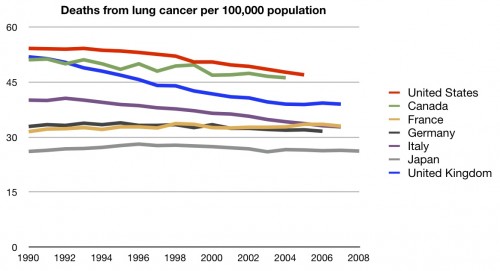

Here’s lung cancer:

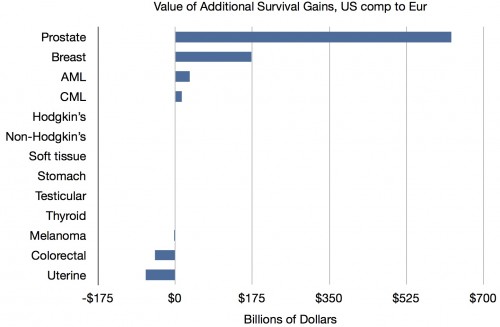

So they’re focusing on cancers where we do better and paying far less attention to those where we don’t. Moreover, the differences in mortality rates aren’t nearly as big as the differences in survival rates. If they’d done their calculations on the mortality differences, the cost might not have appeared to be worth it. Here again are their data, using survival rates, showing how much they are cherry-picking:

Almost all the gains are from prostate and breast cancer. Cherry-picking. And there’s a reason we may look better for those cancers. It’s lead-time bias. They disagree:

> So why did we analyze survivorship rather than population cancer mortality? Naturally, mortality and survivorship convey equivalent information if one conditions on diagnosis. However, at a population level, mortality also depends on the number of people who get cancer each year (incidence). So mortality – unlike survival – is sensitive to underlying trends in behavior affecting cancer that have little to do with the health care system. For example, if the United States stopped smoking at a greater rate than the EU, we would expect mortality to fall, but we should give credit to public health efforts rather than health care delivery. Furthermore, mortality analyses can itself be subject to bias due to changes over time in attribution of cause of death.
>
> Ultimately though, people with cancer—and their physicians—are most concerned about their survival chances once they are diagnosed. While mortality rates in the population may be a focus of epidemiological research, they are not the statistics of greatest interest to those diagnosed with cancer. Once diagnosed, a patient and her physician care more about how long she will live—hence our choice of survival as the primary endpoint. Put another way, researchers do not abandon measuring survival in oncology trials because there is a well-recognized issue of attrition bias. The bottom line here is that one wants to compare differences in health care treatment, survival and not mortality is the appropriate outcome.
>
> Lead-Time Bias Is Not A Plausible Explanation For Better US Survival Rates

As I have argued, survival is absolutely of use when a doctor is talking to a patient. They have that correct. The first question a patient will ask when diagnosed is “How long have I got?” But that doesn’t mean it’s appropriate for cross-country comparisons. If you diagnose a cancer earlier in one country than in another, almost by definition survival time is increased, even if patients in both countries die at the same rate and at the same age. Survival estimates absolutely will be subject to lead-time bias when there are different methods of diagnosis. And, as I’ve said before, in the US the American Cancer Society recommends that women be screened by mammography every year starting at age 40; in the UK, women are screened every three years starting at age 50. It’s almost certain that cancers in American women are going to be picked up earlier. This will absolutely increase survival time, even if it prevents no deaths.
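To make the lead-time point concrete, here is a toy calculation. It is only a sketch, and every number in it is invented for illustration (the ages at death, the age at which symptoms prompt a diagnosis, and the three-year head start from screening are all assumptions, not data from the paper). Both hypothetical countries have exactly the same patients dying at exactly the same ages; the only difference is when the cancer is found.

```python
# Toy illustration of lead-time bias. All numbers are hypothetical.
# The same five patients die at the same ages in both countries.
ages_at_death = [68, 70, 72, 74, 76]

symptom_diagnosis_age = 66  # assumed: cancer found when symptoms appear
lead_time_years = 3         # assumed: screening finds it three years sooner
screen_diagnosis_age = symptom_diagnosis_age - lead_time_years


def five_year_survival(diagnosis_age, death_ages):
    """Share of patients still alive five years after diagnosis."""
    survivors = [age for age in death_ages if age - diagnosis_age >= 5]
    return len(survivors) / len(death_ages)


def mean_survival_years(diagnosis_age, death_ages):
    """Average number of years lived after diagnosis."""
    return sum(age - diagnosis_age for age in death_ages) / len(death_ages)


late_rate = five_year_survival(symptom_diagnosis_age, ages_at_death)
early_rate = five_year_survival(screen_diagnosis_age, ages_at_death)
late_mean = mean_survival_years(symptom_diagnosis_age, ages_at_death)
early_mean = mean_survival_years(screen_diagnosis_age, ages_at_death)

print(f"Five-year survival, no screening:   {late_rate:.0%}")
print(f"Five-year survival, with screening: {early_rate:.0%}")
print(f"Mean survival gain from screening:  {early_mean - late_mean:.1f} years")
print(f"Cancer deaths in each country:      {len(ages_at_death)}")
```

In this made-up cohort, five-year survival jumps from 60% to 100% and average survival grows by three years, yet nobody lives a day longer and the number of deaths is identical. That is the gap between a survival statistic and a mortality statistic.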

Again, look at the chart above from their data. We have massive screening programs for prostate cancer and breast cancer, but not for most of those other cancers. Do you really think that has nothing to do with the massive differences in results between those two cancers and the others?

Go read their whole response so you can rest assured I’m not leaving something out. But they still didn’t address what I consider to be the most damning criticism. Nothing in their study – nothing – proves that increased spending accounts for the differences in survival. Was it a randomized controlled trial? No. Can we know anything about causality? No.

This study showed that survival rates are higher for two cancers that are heavily screened for in the US. Then it declared, in the manuscript’s discussion, that these differences are likely due to the increased health care spending in the US, specifically citing pharmaceuticals. (Side note – you wouldn’t know that this research was funded in part by a pharmaceutical company unless you could access the gated manuscript.) And then in the title, they imply this spending is “worth it”.

That’s far from proven. Even after their response.

(h/t longtime reader Brad Flansbaum)